Economies of Scale

How Deep Learning is Changing Real-World Computer Vision

The expansion of computer-vision-based systems and applications is enabled by many factors, including advances in processors, sensors and development tools. But, arguably, the single most important thing driving the proliferation of computer vision is deep learning.

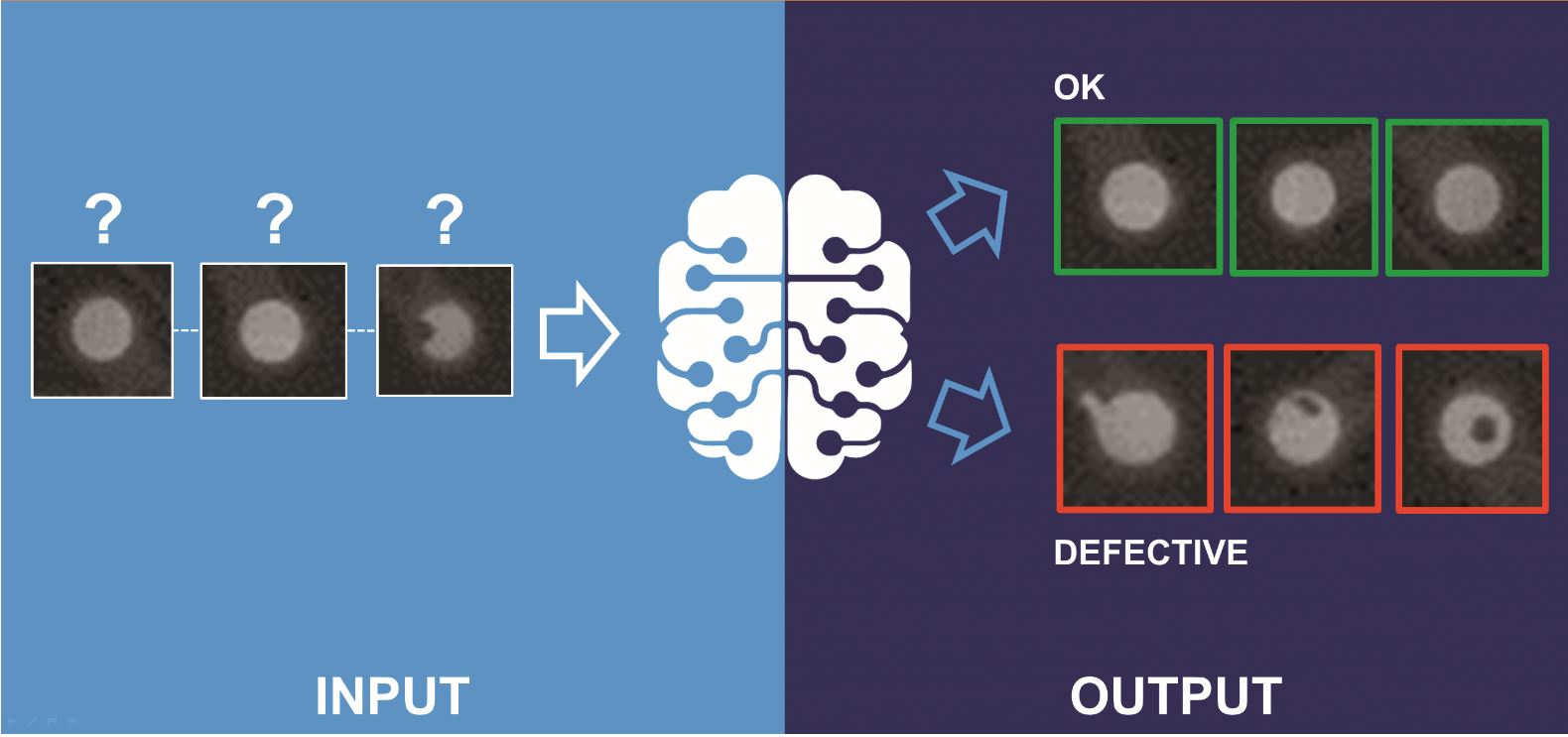

With deep learning we tend to re-use a relatively small handful of algorithms across a wide range of applications and imaging conditions. This has two important consequences. (Image: MVTec Software GmbH)

The fact that deep learning-based visual perception works extremely well – routinely achieving better results than older, hand-crafted algorithms – has been widely discussed. What is less widely understood, but equally important, is how the rise of deep learning is fundamentally changing the process and economics of developing solutions and building-block technologies for commercial computer vision applications. Prior to the widespread use of deep learning in commercial computer vision applications, developers created highly complex, unique algorithms for each application. These algorithms were usually highly tuned to the specifics of the application, including factors such as image sensor characteristics, camera position, and the nature of the background behind the objects of interest. Developing, testing and tuning these algorithms often consumed tens or even hundreds of person-years of work. Even if a company was fortunate enough to have enough people available with the right skills, the magnitude of the effort required meant that only a tiny fraction of potential computer vision applications could actually be addressed.

Less diverse algorithms

With deep learning, in contrast, we tend to re-use a relatively small handful of algorithms across a wide range of applications and imaging conditions. Instead of inventing new algorithms, we re-train existing, proven algorithms. As a consequence, the algorithms being deployed in commercial computer vision systems are becoming much less diverse. This has two important consequences:

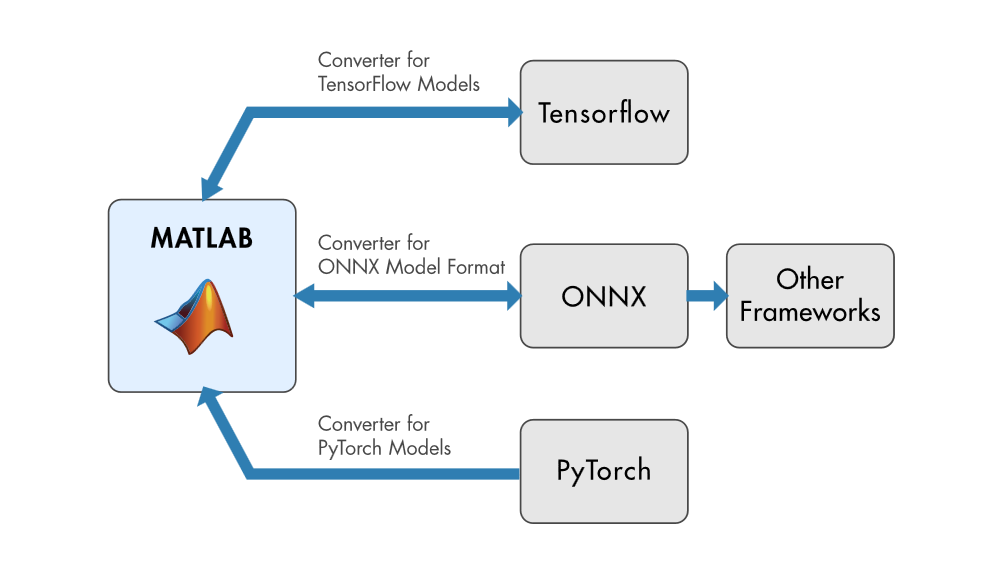

– First, the economics of commercial computer vision applications and building-block technologies have fundamentally shifted. Take processors, for example. Five or ten years ago, developing a specialized processor to deliver significantly improved performance and efficiency on a wide range of computer vision tasks was nearly impossible, due to the extreme diversity of computer vision algorithms. Today, with the focus mainly on deep learning, it’s much practical to create a specialized processor that accelerates vision workloads – and it’s much easer for investors to see a path for such a processor to sell in large volumes, serving a wide range of applications.

– Second, the nature of computer vision algorithm development has changed. Instead of investing years of effort devising novel algorithms, increasingly these days we select among proven algorithms from the research literature, perhaps tweaking them a Bit for our needs. So, in commercial applications much less effort goes into designing algorithms. But deep learning algorithms require lots of data for training and validation. And not just any data. The data must be carefully curated for the algorithms to achieve high levels of accuracy. So, there’s been a substantial shift in the focus of algorithm-related work in commercial computer vision applications, away from devising unique algorithms and towards obtaining the right quantities of the right types of training data.

The right training data

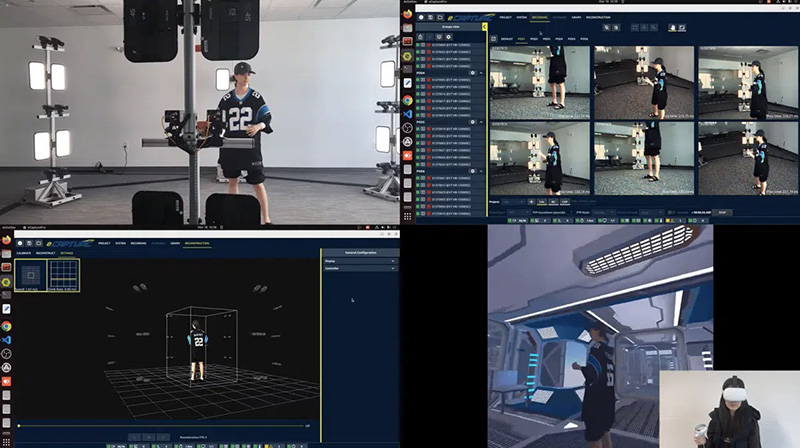

In my consulting firm, BDTI, we’ve seen this very clearly in the nature of the projects our customers bring us. A recent project illustrates this. The customer, a consumer products manufacturer, wanted to create a prototype product incorporating vision-based object classification in three months. The initial target was to identify 20 classes. Hardware design was not an issue – sensors and processors were quickly identified and selected. Algorithm development also moved speedily. The key challenge was data. To achieve acceptable accuracy, the system required a large quantity of high-quality, diverse data. There was no suitable data available, so the data set had to be created from scratch. But not just any data will do. Our first step was to design a data capture rig that would produce the right kinds of images. Here, an understanding of camera characteristics, perspective, and lighting led to detailed specifications for the data capture rig.

The difficulty in creating this data set was compounded by the requirement that the system differentiate between classes that are difficult to for humans to distinguish. In this type of situation, curation of training and validation data is critical to achieving acceptable accuracy. For this project, in addition to specifying the data capture rig, we took several steps to ensure success. For example, we provided the customer with detailed instructions for capturing data, including varying perspective and illumination in specific ways. We also specified employing different personnel to prepare and position the items for capture and requested that domain experts provide input to ensure that the data was realistic. The captured data was then carefully reviewed, with unsuitable images rejected.

Summary

The bottom line here, which shouldn’t surprise any of us, is that while deep learning is an amazing, powerful technology, it’s not a magic wand. There’s still lots of work required to field a robust computer vision solution – and it’s largely a different type of work from what was required using traditional vision algorithms.